Provision a Redis cluster on AWS using Terraform

Learn how to enable developers to deploy a Redis instance for their Workloads in production, using a Terraform definition of an Amazon ElastiCache for Redis Resource.


Introduction

Offering Redis to your developers in production covers many use cases. Examples include developers moving existing apps to your new platform, adding Redis to an existing Workload, or scaffolding a new service that depends on Redis.

This tutorial is a step-by-step guide to using Humanitec’s Resource management capabilities. These let you govern and maintain one set of configuration files rather than hundreds. Your developers will be able to use a Workload specification such as Score and state “my Workload requires Redis”. If they deploy this to production, your default Redis definition will be executed and the specific Redis instance will be created or updated.

Our cloud of choice is AWS, and we will use Amazon ElastiCache for Redis as the managed Redis service.

Your general configuration of “Redis in production” may change, for example through upgrades or changes to the security posture. When it does, you only have to make the change in one place, and it is rolled out to all running Redis instances. Another benefit is increased productivity, because app deployments no longer require a manual Terraform run. Developers do not need to open tickets or understand the resource provisioning process in order to deploy their apps. This concept of dynamically creating app and infrastructure configurations with every deployment is called “Dynamic Configuration Management”.

Prerequisites

To get started with this tutorial, you will need:

- A basic understanding of Terraform

- A basic understanding of resource management in Humanitec

- A basic understanding of Git

- The Humanitec CLI (humctl) installed

- A Humanitec account with Admin permissions

- A sample Application configured in your Humanitec organization with an environment of type production

  - Do not use an Application that is actually live!

  - See this tutorial for setting up a sample Application.

  - To quickly create another Environment for an Application, see Environments.

- An AWS account

- An Amazon EKS cluster

  - The API server endpoint must be reachable from the Platform Orchestrator. You can find the public IPs of the Humanitec SaaS solution here for a source-IP-based access restriction.

- An Amazon VPC and subnet to deploy a demo Redis resource into

- VPC networking and security set up so that:

  - the security groups allow access on TCP port 6379 from the EKS node VPC to the Redis instance VPC

  - there is a network route from the EKS node VPC to the Redis instance VPC and vice versa (!)

- (Optional) The AWS CLI installed locally

- (Optional) kubectl installed locally and a kubeconfig set up to connect to your EKS cluster
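Before you start, you can check that the command-line tools listed above are on your PATH. This is a minimal shell sketch. The function name check_tools is hypothetical, and aws and kubectl are only needed for the optional steps.

```bash
# Hypothetical helper: print which of the given commands are on the PATH and
# return non-zero if at least one of them is missing.
# Example usage: check_tools humctl aws kubectl
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```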

Request a Redis instance using Score

You want to get to a state where developers only need to state, in general terms, that they need Redis, independent of a specific Environment. They’ll usually use a Workload specification like Score to do this. Here’s what such a Score file for a Workload looks like:

```yaml
apiVersion: score.dev/v1b1
metadata:
  name: redis-commander

# Define the ports that this service exposes
service:
  ports:
    rediscommander:
      port: 80 # The port that the service will be exposed on
      targetPort: 8081 # The port that the container will be listening on

# Define the containers that make up this service
containers:
  redis-commander:
    image: rediscommander/redis-commander:latest
    variables:
      REDIS_HOST: ${resources.redis.host}
      REDIS_PORT: ${resources.redis.port}
      REDIS_PASSWORD: ${resources.redis.password}

# Define the resources that this service needs
resources:
  redis: # We need a Redis to store data
    type: redis
```

Note that the resources section indicates the general dependency on a Redis resource.

Developers shouldn’t need to worry about the implementation details. All they have to do is send this Score file to the Orchestrator, and a Redis instance is created or updated.

In order to achieve this, you need to “teach” the Orchestrator how to create/update this resource with every single deployment. Here’s how:

- First, write your default configuration for Redis in production using Terraform.

- Then write Resource Definitions that tell the Orchestrator what to create (Redis), when (when the Environment type is production), and how to create or update it (Terraform). The Resource Definition is what resolves everything and makes the Orchestrator execute the right thing, at the right time, the right way.

- Finally, to verify that this works, you’ll deploy the Score file shown above, let the Orchestrator deploy everything, and examine the result.

This diagram shows all the components working together:

```mermaid
%%{ init: { 'flowchart': { 'curve': 'stepBefore' } } }%%
flowchart LR
  %% need to pad title with whitespace to make all text visible
  subgraph score [fa:fa-file-code Score file ]
    direction TB
    workload([Workload\nredis-commander]) -.- resource([Resource\ntype: redis])
  end
  resdeffileeks["fa:fa-file-code Resource Definition File \nk8s-cluster"] -->|humctl apply| resdefk8s
  resdeffileredis["fa:fa-file-code Resource Definition File \nredis"] -->|humctl apply| resdefredis
  subgraph orchestrator [Platform Orchestrator]
    direction TB
    subgraph application [Application]
      direction TB
      envproduction(["Environment\n#quot;production#quot;"])
    end
    resdefk8s([Resource Definition\ntype: k8s-cluster])
    resdefredis([Resource Definition\ntype: redis])
  end
  score -->|humctl score deploy| envproduction
  %% need to pad title with whitespace to make all text visible
  subgraph aws [fab:fa-aws AWS ]
    direction TB
    subgraph vpceks [VPC]
      direction TB
      subgraph eks [EKS]
        direction TB
        rediscommander(["Container\n#quot;redis-commander#quot;"])
      end
    end
    subgraph vpcredis [VPC]
      direction TB
      elasticacheinstance([ElastiCache\nfor Redis])
    end
    rediscommander -.- elasticacheinstance
  end
  orchestrator -->|deploy| aws
  %% use invisible link to tweak the layout
  resdefredis ~~~ aws
  class application,vpceks,vpcredis nested
```

Prepare your local environment

Prepare these environment variables for the upcoming steps:

```bash
export HUMANITEC_ORG=<my-org-id> # Your Humanitec organization ID, all lowercase
export HUMANITEC_TOKEN=<my-api-token> # An API token for your Humanitec organization
export APP_ID=<my-app-id> # The Application ID of your target Humanitec Application, all lowercase
export VPC_ID=<my-vpc-id> # The ID of your target VPC for the Redis instance
export SUBNET_ID=<my-subnet-id> # The ID of your target subnet for the Redis instance
export REGION=<my-region> # The AWS region of your target VPC for the Redis instance
export CLUSTER_NAME=<my-eks-cluster-name> # The name of your EKS cluster
export CLUSTER_REGION=<my-eks-cluster-region> # The region of your EKS cluster
export AWS_ACCESS_KEY_ID=<my-access-key-id> # An AWS access key ID for an IAM user
export AWS_SECRET_ACCESS_KEY=<my-secret-key> # An AWS secret access key for an IAM user
```

The Application with ID APP_ID must exist in your Humanitec Organization and have an environment of type production.

AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the credentials Terraform will use to authenticate against AWS. The issuing IAM user needs sufficient permissions to create an ElastiCache cluster. The EKS Resource Definition later in this tutorial uses the same credentials. (Note: Instead of long-lived credentials, you can authenticate with AWS using other methods such as workload identity. We are deliberately keeping it simple here.)
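Before moving on, it can help to confirm two things: that every variable above is actually set, and that the IDs marked “all lowercase” really are lowercase. The function check_tutorial_env is a hypothetical helper, not part of humctl. It only inspects your current shell.

```bash
# Hypothetical helper: report unset/empty tutorial variables and IDs that are
# documented as "all lowercase" but contain uppercase letters.
# Example usage: check_tutorial_env && echo "environment looks complete"
check_tutorial_env() {
  local status=0 name value
  for name in HUMANITEC_ORG HUMANITEC_TOKEN APP_ID VPC_ID SUBNET_ID REGION \
              CLUSTER_NAME CLUSTER_REGION AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; do
    # Indirect lookup of the variable whose name is stored in $name
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "not set: $name" >&2
      status=1
    fi
  done
  for name in HUMANITEC_ORG APP_ID; do
    eval "value=\${$name:-}"
    if [ "$value" != "$(printf '%s' "$value" | tr '[:upper:]' '[:lower:]')" ]; then
      echo "not lowercase: $name" >&2
      status=1
    fi
  done
  return "$status"
}
```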

Provide Terraform code for Redis

Start by configuring how exactly the Redis resource in production should look. You do this using Terraform. You can find our examples in this GitHub repository. You do not need to clone it for this tutorial.

The repo contains a relatively straightforward configuration of a Redis instance in main.tf. You’ll later point the Platform Orchestrator to this code when it comes to actually instantiating a Redis resource.

Pay particular attention to the output and input variables. Output variables make information available to your developers’ applications, for example the Redis host and port:

```hcl
output "host" {
  value = aws_elasticache_cluster.redis_cluster.cache_nodes[0].address
}

output "port" {
  value = aws_elasticache_cluster.redis_cluster.cache_nodes[0].port
}
```

By specifying a host and a port you’ll allow developers to access these variables in their Score files. It is best practice to follow the standard Resource Type outputs for the type you are configuring.

You can also use input variables to pass context about the deployment that uses your Resource Definition into your Terraform code. Examples are the name of the Application being deployed or the Environment.

In your Terraform code, use variables to generate a unique name for the Redis instance:

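```hcl
variable "environment" {
  description = "The environment name."
  type        = string
}

variable "app_name" {
  description = "The application name."
  type        = string
}

variable "resource_name" {
  description = "The name of the resource."
  type        = string
}

locals {
  # Truncate each part to at most 15 characters
  env_id = substr(var.environment, 0, min(15, length(var.environment)))
  app_id = substr(var.app_name, 0, min(15, length(var.app_name)))
  # The resource ID looks like "modules.<workload>.externals.<resource>";
  # the fourth segment is the resource name from the Score file
  res_name = split(".", var.resource_name)[3]
  res_id   = substr(local.res_name, 0, min(15, length(local.res_name)))
  # Locals are referenced as local.<name>; "_" is replaced because it is not allowed in cluster IDs
  cluster_id = replace(lower("${local.env_id}-${local.app_id}-${local.res_id}"), "_", "-")
}
```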

For example, deploying the resource redis of the Application my-app to the Environment production results in a Redis instance named production-my-app-redis.

Make sure the generated cluster_id is a valid cluster ID according to the Amazon ElastiCache naming constraints.
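To see what the naming logic above produces, and whether the result is a valid ID, you can mirror it in the shell. This sketch rests on two assumptions. First, the resource ID has the form modules.<workload>.externals.<resource>, so the fourth dot-separated field is the resource name. Second, the ElastiCache rules are 1 to 50 characters (letters, digits and hyphens only), starting with a letter, with no trailing hyphen and no two consecutive hyphens. Please verify the current limits in the AWS documentation. The function names are hypothetical.

```bash
# Hypothetical helpers mirroring the Terraform naming logic shown above.
# Assumes ASCII IDs: printf '%.15s' truncates bytes, Terraform counts characters.

# Build "<env>-<app>-<resource>", truncating each part to 15 characters,
# lowercasing, and replacing "_" with "-" like the locals block.
# Example: make_cluster_id production my-app modules.redis-commander.externals.redis
make_cluster_id() {
  local env_id app_id res_id
  env_id=$(printf '%.15s' "$1")
  app_id=$(printf '%.15s' "$2")
  # Fourth dot-separated field, e.g. "redis" in "modules.redis-commander.externals.redis"
  res_id=$(printf '%s' "$3" | cut -d. -f4)
  res_id=$(printf '%.15s' "$res_id")
  printf '%s-%s-%s\n' "$env_id" "$app_id" "$res_id" \
    | tr '[:upper:]' '[:lower:]' | tr '_' '-'
}

# Check a lowercased ID against the cluster ID rules (max length 50 assumed):
# only a-z, 0-9 and "-", starts with a letter, no trailing or doubled hyphen.
is_valid_cluster_id() {
  local id=$1 max_len=50
  [ -n "$id" ] && [ "${#id}" -le "$max_len" ] || return 1
  case $id in
    [!a-z]* | *[!a-z0-9-]* | *- | *--*) return 1 ;;
  esac
  return 0
}
```

The checks catch cases the Terraform code does not guard against. For example, an Environment ID whose first 15 characters end in a hyphen would produce a double hyphen in the generated name.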

Note: The example Terraform code does not contain a backend definition. The Terraform state file is therefore handled by the Orchestrator and is not visible to you. In a real-life scenario, you will want to define a backend using remote storage that you control. Read more about this in Terraform driver.

Create Resource Definition for Redis

You now know HOW the Redis instance should be created in general, but a few pieces are still missing to make it generally available. The Platform Orchestrator needs to know WHAT has to be created to match the request (the Resource Type) and WHEN to use it (the Matching Criteria). In other words: in which context must a Score file reach the Platform Orchestrator for it to execute the Terraform code you just reviewed?

You’ll also need to configure how to pass the inputs into the Terraform code AND how to actually execute the Terraform code. Finally, you’ll need to collect the outputs.

You can do all of this by configuring a Resource Definition using the Terraform Driver to make the association to the Terraform code file. Remember: the Resource Definition defines the WHAT (resource of type Redis), the WHEN (env=production), and the HOW (using the Terraform driver and the input to pass on).

Next, create the Resource Definition using the Humanitec CLI (humctl). Create the manifest:

```bash
cat << EOF > resource-definition-redis.yaml
apiVersion: entity.humanitec.io/v1b1
kind: Definition
metadata:
  id: production-redis
entity:
  type: redis
  name: Production-Redis
  driver_type: ${HUMANITEC_ORG}/terraform
  driver_inputs:
    values:
      source:
        url: https://github.com/Humanitec-DemoOrg/redis-elasticache-example.git
        rev: refs/heads/main
      variables:
        vpc_id: ${VPC_ID}
        subnet_ids: ["${SUBNET_ID}"]
        region: ${REGION}
        environment: \${context.env.id}
        app_name: \${context.app.id}
        resource_name: \${context.res.id}
    secrets:
      variables:
        credentials:
          access_key: ${AWS_ACCESS_KEY_ID}
          secret_key: ${AWS_SECRET_ACCESS_KEY}
  criteria:
    - env_type: production
EOF
```

Let’s dissect the Resource Definition:

The what is type: redis. It matches the abstract request in the Score file, so the Orchestrator considers this Resource Definition whenever a Resource of type redis is requested.

The when refers to the criteria the Orchestrator uses to match this Resource Definition. In this case, it is:

```yaml
criteria:
  - env_type: production
```

Taken together, these tell the Orchestrator to use this Resource Definition only when a deployment to an Environment of type production requires a Resource of type redis.
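To make the WHAT and WHEN concrete, here is a deliberately simplified shell model of how a single criteria entry could be evaluated. The requested type must equal the definition’s type, and every criterion the entry sets must equal the deployment context. The function definition_matches and its positional arguments are hypothetical. The sketch does not model how the Orchestrator chooses between several matching Resource Definitions.

```bash
# Hypothetical, simplified model of one criteria entry. Arguments:
#   def_type crit_env_type crit_app_id req_type ctx_env_type ctx_app_id
# An empty criterion means "not set" and matches any value.
# Example: definition_matches redis production "" redis production my-app
definition_matches() {
  local def_type=$1 crit_env_type=$2 crit_app_id=$3
  local req_type=$4 ctx_env_type=$5 ctx_app_id=$6
  # WHAT: the Resource Type must match the request
  [ "$def_type" = "$req_type" ] || return 1
  # WHEN: every criterion that is set must equal the deployment context
  [ -z "$crit_env_type" ] || [ "$crit_env_type" = "$ctx_env_type" ] || return 1
  [ -z "$crit_app_id" ] || [ "$crit_app_id" = "$ctx_app_id" ] || return 1
  return 0
}
```

With the Redis definition above, a request for redis in a production Environment matches. The same request from a development Environment, or a request for another type, does not.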

The how specifies exactly how to create this Redis instance. driver_type: my-humanitec-org/terraform names the Driver that executes the Terraform code referenced in the driver inputs:

```yaml
url: https://github.com/Humanitec-DemoOrg/redis-elasticache-example.git
rev: refs/heads/main
```

Driver input variables allow you to forward inputs to the Terraform module:

```yaml
variables:
  vpc_id: ${VPC_ID}
  subnet_ids: ["${SUBNET_ID}"]
  region: ${REGION}
  environment: ${context.env.id}
  app_name: ${context.app.id}
  resource_name: ${context.res.id}
```

The ${context} variables will be populated at deployment time by the Platform Orchestrator. A specific Deployment will target a specific Environment for a specific Application and create a specific Resource.
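The manifest above mixes two kinds of placeholders. It is written through an unquoted heredoc (<< EOF), so the shell expands ${VPC_ID}, ${REGION} and the other exported variables immediately. The backslash in \${context.env.id} keeps that placeholder literal, so the Orchestrator can resolve it at deployment time. The hypothetical function render_vars_demo reproduces this with two lines:

```bash
# Hypothetical demo: an unquoted heredoc expands ${VPC_ID} now, while the
# escaped \${context.env.id} is written literally for the Orchestrator.
# Example usage: VPC_ID=vpc-0123; render_vars_demo
render_vars_demo() {
  cat << EOF
vpc_id: ${VPC_ID}
environment: \${context.env.id}
EOF
}
```

Quoting the delimiter (<< 'EOF') would disable expansion entirely, including ${VPC_ID}. That is why the tutorial escapes only the ${context...} placeholders instead.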

Create the Resource Definition by running the following command:

```bash
humctl apply -f resource-definition-redis.yaml --org $HUMANITEC_ORG --token $HUMANITEC_TOKEN
```

Verify that the Resource Definition was created:

```bash
humctl get resource-definition production-redis --org $HUMANITEC_ORG --token $HUMANITEC_TOKEN
```

This will output the Resource Definition as registered with the Orchestrator.

Connect your EKS cluster

Before deploying your Application you need to instruct the Orchestrator to deploy it to your target EKS cluster. This requires a properly configured Resource Definition of type k8s-cluster. If you already have one, make sure its Matching Criteria will match your target Application for the production Environment, and skip to the next step.

Map an IAM User with the required permissions into your EKS cluster according to the instructions for connecting EKS.

Execute this snippet to prepare the Resource Definition:

```bash
cat << EOF > resource-definition-eks.yaml
apiVersion: entity.humanitec.io/v1b1
kind: Definition
metadata:
  id: demo-cluster-eks
entity:
  name: EKS Demo Cluster
  type: k8s-cluster
  driver_type: humanitec/k8s-cluster-eks
  criteria:
    - app_id: ${APP_ID}
      env_type: production
  driver_inputs:
    values:
      name: ${CLUSTER_NAME}
      region: ${CLUSTER_REGION}
    secrets:
      credentials:
        aws_access_key_id: ${AWS_ACCESS_KEY_ID}
        aws_secret_access_key: ${AWS_SECRET_ACCESS_KEY}
EOF
```

Create the Resource Definition by running the following command:

```bash
humctl apply -f resource-definition-eks.yaml --org $HUMANITEC_ORG --token $HUMANITEC_TOKEN
```

Verify that the Resource Definition was created:

```bash
humctl get resource-definition demo-cluster-eks --org $HUMANITEC_ORG --token $HUMANITEC_TOKEN
```

This will output the Resource Definition as registered with the Orchestrator.

Deploy the Application to production

Now that the Resource Definitions for Redis as well as your target cluster are available, your developers can self-serve Redis in production whenever they need it.

We recommend a test deployment of a redis-commander Workload (a web-based Redis admin tool) to prove that everything works as expected. Do this by creating the following Score file:

```bash
cat << EOF > example-score.yaml
apiVersion: score.dev/v1b1
metadata:
  name: redis-commander

# Define the ports that this service exposes
service:
  ports:
    rediscommander:
      port: 80 # The port that the service will be exposed on
      targetPort: 8081 # The port that the container will be listening on

# Define the containers that make up this service
containers:
  redis-commander:
    image: rediscommander/redis-commander:latest
    variables:
      REDIS_HOST: \${resources.redis.host}
      REDIS_PORT: \${resources.redis.port}
      REDIS_PASSWORD: \${resources.redis.password}

# Define the resources that this service needs
resources:
  redis: # We need a Redis to store data
    type: redis
EOF
```

Note the variables defined for the redis-commander container. The Platform Orchestrator will populate these environment variables using the outputs of the actual redis Resource.

Perform the Deployment using the humctl CLI:

```bash
humctl score deploy \
  -f example-score.yaml \
  --app ${APP_ID} \
  --env production \
  --org ${HUMANITEC_ORG} \
  --token ${HUMANITEC_TOKEN}
```

Note that you are targeting the production environment by specifying --env production.

You’d normally deploy to production from a CI pipeline, not the command line. This is just to prove the Resource Definition works.

Check the status of the most recent Humanitec Deployment in the production environment of your Application:

```bash
humctl get deploy . --org $HUMANITEC_ORG --app $APP_ID --env production --token $HUMANITEC_TOKEN -o yaml
```

The command will show status: in progress at first and switch to status: succeeded when done. The Deployment can take five to ten minutes while the Redis cluster is being created in AWS.
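Instead of re-running the status command by hand, you can poll it. This sketch makes two assumptions. The first status: line in the YAML output is taken to be the Deployment status, and a finished Deployment is assumed to report succeeded or failed. The function names deploy_status and wait_for_deploy are hypothetical. The humctl invocation is the one shown above.

```bash
# Hypothetical helper: read YAML on stdin and print the value of the first
# "status:" line, with surrounding quotes removed.
deploy_status() {
  sed -n 's/^[[:space:]]*status:[[:space:]]*//p' | head -n 1 | tr -d "\"'"
}

# Hypothetical helper: poll the status command shown above every 30 seconds
# until the Deployment succeeds (return 0), fails, or max_attempts is reached.
# Example usage: wait_for_deploy 40
wait_for_deploy() {
  local max_attempts=${1:-40} attempt=1 status
  while [ "$attempt" -le "$max_attempts" ]; do
    status=$(humctl get deploy . --org "$HUMANITEC_ORG" --app "$APP_ID" \
      --env production --token "$HUMANITEC_TOKEN" -o yaml | deploy_status)
    echo "Attempt $attempt: status=${status:-unknown}"
    case $status in
      succeeded) return 0 ;;
      failed) return 1 ;;
    esac
    attempt=$((attempt + 1))
    sleep 30
  done
  echo "Deployment still not finished after $max_attempts checks" >&2
  return 1
}
```

Forty checks, 30 seconds apart, leave about 20 minutes, which covers the five to ten minutes mentioned above with some margin.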

Besides using the CLI, you can also check the status of the Deployment via the Orchestrator UI as shown here.

If you have the AWS CLI installed, check the availability of the ElastiCache cluster with this command. Otherwise, use the AWS Management Console.

```bash
aws elasticache describe-cache-clusters --region $REGION
```

Your instance will show "CacheClusterStatus": "creating" at first and switch to "available" when ready.

The humctl score deploy command triggered a series of steps in the Orchestrator named “Read - Match - Create - Deploy” (RMCD). You may want to read more about it here while you are waiting. In our example, the Resource Definition production-redis was matched to the request for a Resource of type: redis because we are deploying a Resource requirement of that type to an Environment of type production, and that fulfills the Matching Criteria of our Resource Definition.

Note that the Kubernetes resources, i.e. the actual Workload, will be created last in the “Deploy” phase. You won’t see them until the Elasticache Redis provisioning is done.

Verify the result

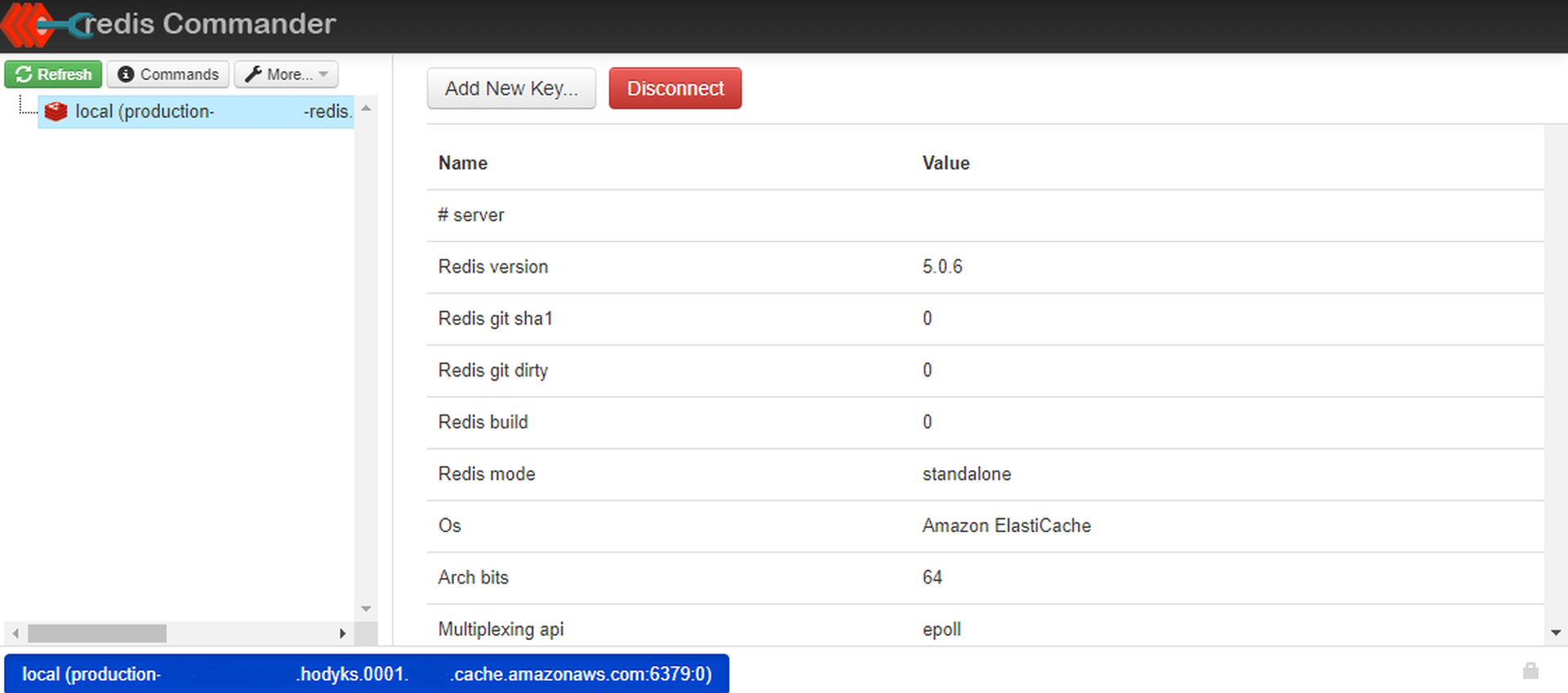

You should now have an active Amazon ElastiCache for Redis cluster and a deployed Workload named “redis-commander” in the production Environment of your Application. The Workload is parameterized to connect to the Redis instance through its environment variables.

Check the log output of the Redis Commander container in that Environment. There are two ways to do that: through the Orchestrator UI or with kubectl. In the Orchestrator UI:

- From the left-hand navigation menu, select Applications.

- Find your Application and select the production Environment.

- Select the “Active deploy”.

- Select the Workload “redis-commander”.

- Select the container “redis-commander”.

- Check the “Container logs”.

If you cannot find the container, the Kubernetes resources may not be deployed yet.

Alternatively, with kubectl:

```bash
kubectl get pods -A \
  --no-headers \
  -l app.kubernetes.io/name=redis-commander \
  -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name \
  | xargs kubectl logs -n
```

If this command returns an error, the Kubernetes resources may not be deployed yet.

The log should display a message like “Redis Connection production-my-app-redis.hodyks.0001.myregion.cache.amazonaws.com:6379 using Redis DB #0”.
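If you fetch the logs with kubectl, you can pipe them into a small classifier. It looks for the two messages quoted in this tutorial: the connection message above and the ETIMEDOUT error described under Troubleshooting. The function redis_connection_state is hypothetical and only matches those example strings. ETIMEDOUT is checked first, so a timeout is reported if both messages appear.

```bash
# Hypothetical helper: classify Redis Commander logs read from stdin using the
# example messages quoted in this tutorial. Prints connected, timeout or unknown.
# Example usage: append "| redis_connection_state" to the kubectl logs pipeline above.
redis_connection_state() {
  local logs
  logs=$(cat)
  # The timeout error is checked first so it wins if both messages appear
  if printf '%s\n' "$logs" | grep -q 'connect ETIMEDOUT'; then
    echo timeout
  elif printf '%s\n' "$logs" | grep -q 'Redis Connection .* using Redis DB'; then
    echo connected
  else
    echo unknown
  fi
}
```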

The Redis Commander also has a web UI we can connect to. Remember that we requested a service to expose port 80 for the workload in the Score file:

```yaml
service:
  ports:
    rediscommander:
      port: 80 # The port that the service will be exposed on
      targetPort: 8081 # The port that the container will be listening on
```

Check the existence of the Kubernetes Service:

```bash
kubectl get svc -A -l app.kubernetes.io/name=redis-commander
```

You should see the Service like this:

```text
NAMESPACE                              NAME              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
4ceda8c3-f8c3-4cd4-b855-73eec08c6b48   redis-commander   ClusterIP   10.10.10.10   <none>        80/TCP    6m
```

Forward local port 8888 to the Service:

```bash
kubectl get svc -A \
  -l app.kubernetes.io/name=redis-commander \
  --no-headers \
  -o custom-columns=NAMESPACE:.metadata.namespace \
  | xargs kubectl port-forward svc/redis-commander 8888:80 -n
```

Then open http://localhost:8888.

You should see the Redis Commander workload successfully hooked up to the Redis instance like this:

Troubleshooting

If you receive a deployment error “InvalidVpcID.NotFound: The vpc ID 'vpc-xxxxxxxxxxxxxxxxx' does not exist” in the Platform Orchestrator, check the region value in the variables section of the Redis Resource Definition. It must match the region of your VPC.

If you receive an error in the Redis Commander container logs “setUpConnection (R:production-my-app-redis.hodyks.0001.myregion.cache.amazonaws.com:6379:0) Redis error Error: connect ETIMEDOUT”, check that the networking setup of the VPCs you are using for EKS and Redis fulfills the requirements as per the prerequisites.

Cleaning up

Delete the production Environment of your demo Application:

```bash
humctl delete env production --org $HUMANITEC_ORG --app $APP_ID --token $HUMANITEC_TOKEN
```

This will also delete

- the AWS Elasticache instance created for this Environment. It may take several minutes for the deletion to begin.

- the Kubernetes objects deployed for the Workload onto the EKS cluster.

Delete the Resource Definition of type redis. This only works once it is no longer in use, i.e. after the Redis resource has been completely removed.

```bash
humctl delete resource-definition production-redis --org $HUMANITEC_ORG --token $HUMANITEC_TOKEN
```
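This deletion only succeeds once the Redis resource is gone, and removing the ElastiCache cluster can take several minutes. You may therefore prefer to retry it automatically. The retry function below is a generic, hypothetical helper and not part of humctl.

```bash
# Hypothetical helper: run a command until it succeeds, at most max_attempts
# times, sleeping delay seconds between attempts.
# Example usage (up to 20 attempts, 30 seconds apart):
#   retry 20 30 humctl delete resource-definition production-redis \
#     --org "$HUMANITEC_ORG" --token "$HUMANITEC_TOKEN"
retry() {
  local max_attempts=$1 delay=$2 attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "Giving up after $attempt attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 0
}
```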

If you created it for this tutorial, also delete the Resource Definition of type k8s-cluster. Note that this will not delete your actual EKS cluster. Delete or keep the cluster at your own discretion.

```bash
humctl delete resource-definition demo-cluster-eks --org $HUMANITEC_ORG --token $HUMANITEC_TOKEN
```

Remove local files:

```bash
rm resource-definition-redis.yaml
rm resource-definition-eks.yaml
rm example-score.yaml
```

Close the active shell to remove all of the environment variables.

Recap

Congrats! You’ve successfully completed the tutorial on how to make a Resource of type redis available for all Environments of type production. You instructed the Platform Orchestrator to provision such a Resource by creating a Resource Definition of type redis. It used the Terraform Driver and Terraform code read from a Git repo. That code employs the AWS Terraform provider and defines an Amazon ElastiCache for Redis instance.

You made sure you have a target EKS cluster to deploy your workload to by providing another Resource Definition, this time of type k8s-cluster.

You prepared a Score file defining your Workload (a “Redis Commander” container) and its dependency on a Resource of type redis.

Finally, you deployed the Score file using the humctl tool. Matching the Resource Definitions defined earlier, the Platform Orchestrator provisioned the Redis instance, deployed the Workload to the desired EKS cluster, and wired it up so the container could connect to Redis.

Next steps

- Now that you’re familiar with how to make a Resource of type Redis available for all production Environments, you can expand on that knowledge in our tutorial Update Resource Definitions for related Applications.

- See how to add common infrastructure Resources to your platform based on our Resource Packs.