Get started

- What is the Platform Orchestrator?

- Prerequisites

- Create an organization

- Install the hctl CLI

- Set up demo infrastructure

- Set up the TF Orchestrator provider

- Create a runner

- Create a project and environment

- Create a module for a workload

- Create a manifest

- Perform a deployment

- Inspect the running workload

- Create a module for a database

- Add the database to the manifest

- Re-deploy

- Clean up

- Recap

- Next steps

Get started using the Platform Orchestrator by following this step-by-step guide to learn all essential concepts and perform your first hands-on tasks.

At the end of this tutorial you will know how to:

- Set up your infrastructure to run Orchestrator deployments

- Describe an application and its resource requirements

- Use the Orchestrator to deploy a workload

- Use the Orchestrator to deploy dependent infrastructure resources like a database

- Observe the results and status of deployments

- Create a link from the Orchestrator to real-world resources

The tutorial flow creates some temporary infrastructure that you will clean up again at the end.

Duration: about 45 minutes

What is the Platform Orchestrator?

Humanitec’s Platform Orchestrator unifies infrastructure management for humans and AI agents, streamlining operations, enforcing standards, and boosting developer productivity in complex environments.

Prerequisites

The tutorial currently supports using these cloud providers:

- Amazon Web Services (AWS)

- Google Cloud Platform (GCP)

- Microsoft Azure

To walk through this tutorial, you’ll need:

- An account in one of the supported cloud providers to provision some temporary infrastructure to run and deploy resources

- Either the Terraform CLI or the OpenTofu CLI installed locally

- Git installed locally

- (optional) kubectl installed locally
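The tool requirements listed above can be checked up front with a short script. This is a minimal sketch and not part of the tutorial repository: the helper names `require_any` and `check_prerequisites` are hypothetical. It also checks for `jq`, which is not in the list above but is used by later commands in this tutorial.

```shell
# Hypothetical helper: succeeds if at least one of the given commands is on PATH.
require_any() {
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "found: $cmd"
      return 0
    fi
  done
  echo "missing: need one of: $*" >&2
  return 1
}

# Hypothetical wrapper that runs the checks relevant to this tutorial.
check_prerequisites() {
  status=0
  require_any terraform tofu || status=1   # either TF CLI is sufficient
  require_any git || status=1
  require_any jq || status=1               # used by later commands in this tutorial
  require_any kubectl || echo "note: kubectl is optional"
  return "$status"
}

check_prerequisites || echo "Install the missing tools before continuing." >&2
```

The cloud-specific CLIs described below are not covered by this sketch; they can be added with further `require_any` calls.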

Amazon Web Services (AWS)

When using AWS, you’ll also need:

- Access to an AWS account that has sufficient quota to run a Kubernetes cluster and a small RDS database

- The aws CLI installed and authenticated. The tutorial will use this authenticated user to set up the base infrastructure on AWS

Google Cloud Platform (GCP)

When using GCP, you’ll also need:

- Access to a GCP project that has sufficient quota to run a Kubernetes cluster and a small Cloud SQL database

- The GCP project must have the “Cloud SQL Admin API” enabled to perform the database steps. Go to the Google Cloud console to check the status and activate the API

- The gcloud CLI installed and authenticated. The tutorial will use this authenticated user to set up the base infrastructure on GCP

Microsoft Azure

When using Azure, you’ll also need:

- Access to an Azure subscription that has sufficient quota to run an AKS cluster and a small Azure Database for PostgreSQL instance

- The az CLI installed and authenticated. The tutorial will use this authenticated user to set up the base infrastructure on Azure

- The kubelogin CLI installed. This is required by the Kubernetes TF provider to authenticate against AKS

Create an organization

Organizations are the top level grouping of all your Orchestrator objects, similar to “tenants” in other tools. Your real-world organization or company will usually have one Orchestrator organization.

If you already have an organization, you’re good. Otherwise, register for a free trial organization now.

Once done, you will have access to the Orchestrator console at https://console.humanitec.dev.

Install the hctl CLI

Follow the instructions on the CLI page to install the hctl CLI if it is not already present on your system.

Ensure you are logged in. Execute this command and follow the instructions:

hctl login

Ensure that the CLI is configured to use your Orchestrator organization:

hctl config show

If the default_org_id is empty, set the organization to your value like this:

hctl config set-org your-org-id

All subsequent hctl commands will now be targeted at your organization.

Set up demo infrastructure

This tutorial requires some demo infrastructure to run things. Most notably, it creates a publicly accessible Kubernetes cluster that hosts the runner component and serves as the deployment target for a workload.

We have prepared a public repository with Terraform/OpenTofu (“TF”) code to set it up in the cloud of your choice.

Clone the repository and navigate to the infra directory:

git clone https://github.com/humanitec-tutorials/get-started

cd get-started/infra

The repository comes with a terraform.tfvars.template file. Copy this file and remove the template extension:

cp terraform.tfvars.template terraform.tfvars

Edit the new terraform.tfvars file and provide all necessary values as instructed by the inline comments in the section for your selected cloud provider.

Activate the Terraform/OpenTofu file for your selected cloud provider (make sure to use only one):

AWS

mv aws.tf.useme aws.tf

GCP

mv gcp.tf.useme gcp.tf

Azure

mv azure.tf.useme azure.tf
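Because only one cloud provider file may be active, a quick count of the renamed files can catch a mistake before running apply. This is a minimal sketch; the helper name `count_active_clouds` is hypothetical, and the file names are the ones used in the commands above.

```shell
# Hypothetical helper: counts how many cloud provider files are active
# (renamed from *.tf.useme to *.tf) in the given directory.
count_active_clouds() {
  dir=${1:-.}
  count=0
  for f in aws.tf gcp.tf azure.tf; do
    if [ -f "$dir/$f" ]; then
      count=$((count + 1))
    fi
  done
  echo "$count"
}

# Run from the infra directory
active=$(count_active_clouds .)
if [ "$active" -ne 1 ]; then
  echo "expected exactly one active cloud provider file, found $active" >&2
fi
```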

Initialize your Terraform or OpenTofu environment and run apply:

Terraform

terraform init

terraform apply

OpenTofu

tofu init

tofu apply

Check the output to see which resources will be created, and confirm. This may take five minutes or more.

To continue with the tutorial while the apply is being executed, open a new shell and navigate to the ./orchestrator directory:

cd <your-repo-checkout-location>/get-started/orchestrator

You now have:

- ✅ Created a generic infrastructure which will serve to run deployments and spin up resources

Set up the TF Orchestrator provider

Copy variable values from the demo infrastructure

Copy the existing terraform.tfvars file from the infra directory into the present orchestrator directory to re-use its values:

cp ../infra/terraform.tfvars .

Prepare the TF core setup

Open the terraform.tfvars file (the one in the current orchestrator directory) and append the following configuration, replacing the organization value with yours.

# ===================================

# Platform Orchestrator configuration

# ===================================

# Your Orchestrator organization ID

orchestrator_org = "replace-with-your-orchestrator-organization-id"

Save the file.

You will now set up the Platform Orchestrator provider for Terraform/OpenTofu to maintain Orchestrator resources in your organization.

Create a new file named providers.tf in the current orchestrator directory, containing this content:

terraform {

required_providers {

# Provider for managing Orchestrator objects

platform-orchestrator = {

source = "humanitec/platform-orchestrator"

version = "~> 2"

}

}

}

You don’t need to explicitly configure the Orchestrator TF provider with the organization ID and token as the provider falls back to the $HOME/.config/hctl file set by running hctl login.
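Since the provider relies on that fallback, it can help to confirm the login data exists before the first apply. This is a minimal sketch; the helper name `has_hctl_config` is hypothetical, and the path is the one named above. It only checks that the path exists and does not validate its contents.

```shell
# Hypothetical helper: reports whether the hctl login data
# that the provider falls back to is present.
has_hctl_config() {
  [ -e "${HOME}/.config/hctl" ]
}

if has_hctl_config; then
  echo "hctl configuration found"
else
  echo "no hctl configuration found; run 'hctl login' first" >&2
fi
```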

You now have:

- ✅ Configured the Orchestrator TF provider locally so that you can now manage Orchestrator objects via IaC

Create a runner

Runners are used by the Orchestrator to execute Terraform/OpenTofu modules securely inside your own infrastructure.

You will now define a runner that is using the demo infrastructure created for this tutorial.

You also define a runner rule. Runner rules are attached to runners and define under which circumstances to use which runner.

There are different runner types. This tutorial uses the kubernetes-agent runner which works on all kinds of Kubernetes clusters. It is installed into the demo cluster via a Helm chart and, for simplicity, will maintain TF state in Kubernetes secrets.

The following steps require the apply for the demo infrastructure to be finished. Verify now that this is the case by checking your shell, which may be another window.

Make sure you are in the orchestrator directory in your shell.

Create a private/public key pair for the runner to authenticate against the Orchestrator:

openssl genpkey -algorithm ed25519 -out runner_private_key.pem

openssl pkey -in runner_private_key.pem -pubout -out runner_public_key.pem
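To confirm that the two files belong together, you can derive the public key from the private key again and compare it with the stored public key. This is a minimal sketch, not a step required by the tutorial; the helper name `keys_match` is hypothetical. It also restricts the private key file to the current user, which is a general precaution rather than something the tutorial mandates.

```shell
# Hypothetical helper: succeeds if the public key file was derived
# from the private key file.
keys_match() {
  private_key=$1
  public_key=$2
  derived=$(openssl pkey -in "$private_key" -pubout 2>/dev/null) || return 1
  [ "$derived" = "$(cat "$public_key")" ]
}

if [ -f runner_private_key.pem ] && [ -f runner_public_key.pem ]; then
  # Restrict access to the private key to the current user
  chmod 600 runner_private_key.pem
  if keys_match runner_private_key.pem runner_public_key.pem; then
    echo "key pair is consistent"
  else
    echo "public key does not match private key" >&2
  fi
fi
```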

Prepare additional variables. This command will create a new variables file orchestrator.tfvars, using values from the outputs of the prior demo infrastructure setup:

Terraform

cat << EOF > orchestrator.tfvars

enabled_cloud_provider = "$(terraform output -state "../infra/terraform.tfstate" -json | jq -r ".enabled_cloud_provider.value")"

k8s_cluster_name = "$(terraform output -state "../infra/terraform.tfstate" -json | jq -r ".k8s_cluster_name.value")"

cluster_ca_certificate = "$(terraform output -state "../infra/terraform.tfstate" -json | jq -r ".cluster_ca_certificate.value")"

k8s_cluster_endpoint = "$(terraform output -state "../infra/terraform.tfstate" -json | jq -r ".k8s_cluster_endpoint.value")"

agent_runner_irsa_role_arn = "$(terraform output -state "../infra/terraform.tfstate" -json | jq -r ".agent_runner_irsa_role_arn.value")"

gcp_agent_runner_service_account_email = "$(terraform output -state "../infra/terraform.tfstate" -json | jq -r ".gcp_agent_runner_service_account_email.value")"

azure_agent_runner_managed_identity_client_id = "$(terraform output -state "../infra/terraform.tfstate" -json | jq -r ".azure_agent_runner_managed_identity_client_id.value")"

azure_tenant_id = "$(terraform output -state "../infra/terraform.tfstate" -json | jq -r ".azure_tenant_id.value")"

EOF

OpenTofu

cat << EOF > orchestrator.tfvars

enabled_cloud_provider = "$(tofu output -state "../infra/terraform.tfstate" -json | jq -r ".enabled_cloud_provider.value")"

k8s_cluster_name = "$(tofu output -state "../infra/terraform.tfstate" -json | jq -r ".k8s_cluster_name.value")"

cluster_ca_certificate = "$(tofu output -state "../infra/terraform.tfstate" -json | jq -r ".cluster_ca_certificate.value")"

k8s_cluster_endpoint = "$(tofu output -state "../infra/terraform.tfstate" -json | jq -r ".k8s_cluster_endpoint.value")"

agent_runner_irsa_role_arn = "$(tofu output -state "../infra/terraform.tfstate" -json | jq -r ".agent_runner_irsa_role_arn.value")"

gcp_agent_runner_service_account_email = "$(tofu output -state "../infra/terraform.tfstate" -json | jq -r ".gcp_agent_runner_service_account_email.value")"

azure_agent_runner_managed_identity_client_id = "$(tofu output -state "../infra/terraform.tfstate" -json | jq -r ".azure_agent_runner_managed_identity_client_id.value")"

azure_tenant_id = "$(tofu output -state "../infra/terraform.tfstate" -json | jq -r ".azure_tenant_id.value")"

EOF

Verify all values are set. If they are not, the apply run for the demo infrastructure may not be finished yet. Wait for this to be done, and repeat the previous command.

cat orchestrator.tfvars
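Instead of checking the file by eye, you can list the variables whose value is empty or the literal string null, which is what jq -r prints when an output is missing. This is a minimal sketch; the helper name `unset_tfvars` is hypothetical. Which variables may legitimately stay empty for your cloud provider is an assumption this sketch does not resolve, so treat the output as a list to review.

```shell
# Hypothetical helper: prints the names of variables whose value is
# empty ("") or the literal "null" in the given tfvars file.
unset_tfvars() {
  # Matches lines of the form: name = "" or name = "null"
  sed -n -E 's/^[[:space:]]*([A-Za-z0-9_]+)[[:space:]]*=[[:space:]]*"(null)?"[[:space:]]*$/\1/p' "$1"
}

if [ -f orchestrator.tfvars ]; then
  unset_tfvars orchestrator.tfvars
fi
```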

Open the file providers.tf and add this configuration to the required_providers block:

# Provider for installing the runner Helm chart

helm = {

source = "hashicorp/helm"

version = "~> 3"

}

# Provider for installing K8s objects for the runner

kubernetes = {

source = "hashicorp/kubernetes"

version = "~> 2"

}

# Provider for reading local files

local = {

source = "hashicorp/local"

version = ">= 2.0"

}

Create a new file named orchestrator.tf in the current orchestrator directory, containing this configuration:

data "local_file" "agent_runner_public_key" {

filename = "./runner_public_key.pem"

}

data "local_file" "agent_runner_private_key" {

filename = "./runner_private_key.pem"

}

# Configure the Kubernetes provider for accessing the demo cluster

provider "kubernetes" {

host = var.k8s_cluster_endpoint

cluster_ca_certificate = base64decode(var.cluster_ca_certificate)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

args = var.enabled_cloud_provider == "aws" ? ["eks", "get-token", "--output", "json", "--cluster-name", var.k8s_cluster_name, "--region", var.aws_region] : var.enabled_cloud_provider == "azure" ? ["get-token", "--login", "azurecli", "--server-id", "6dae42f8-4368-4678-94ff-3960e28e3630"] : null

command = var.enabled_cloud_provider == "aws" ? "aws" : var.enabled_cloud_provider == "azure" ? "kubelogin" : "gke-gcloud-auth-plugin"

}

}

# Configure the Helm provider to use the cloud CLI for K8s auth

provider "helm" {

kubernetes = {

host = var.k8s_cluster_endpoint

cluster_ca_certificate = base64decode(var.cluster_ca_certificate)

exec = {

api_version = "client.authentication.k8s.io/v1beta1"

args = var.enabled_cloud_provider == "aws" ? ["eks", "get-token", "--output", "json", "--cluster-name", var.k8s_cluster_name, "--region", var.aws_region] : var.enabled_cloud_provider == "azure" ? ["get-token", "--login", "azurecli", "--server-id", "6dae42f8-4368-4678-94ff-3960e28e3630"] : null

command = var.enabled_cloud_provider == "aws" ? "aws" : var.enabled_cloud_provider == "azure" ? "kubelogin" : "gke-gcloud-auth-plugin"

}

}

}

# Install the Kubernetes agent runner Helm chart and its prerequisites and register the runner with the Orchestrator

module "kubernetes_agent_runner" {

source = "github.com/humanitec-tf-modules/kubernetes-agent-orchestrator-runner"

humanitec_org_id = var.orchestrator_org

private_key_path = "./runner_private_key.pem"

public_key_path = "./runner_public_key.pem"

# Workload Identity configuration - link to the cloud IAM identity created above

service_account_annotations = var.enabled_cloud_provider == "aws" ? {

"eks.amazonaws.com/role-arn" = var.agent_runner_irsa_role_arn

} : var.enabled_cloud_provider == "azure" ? {

"azure.workload.identity/client-id" = var.azure_agent_runner_managed_identity_client_id

} : {

"iam.gke.io/gcp-service-account" = var.gcp_agent_runner_service_account_email

}

pod_template = var.enabled_cloud_provider == "azure" ? jsonencode({

metadata = {

labels = {

"azure.workload.identity/use" = "true"

}

}

}) : null

}

# Assign a pre-existing ClusterRole to the service account used by the runner

# to enable the runner to create deployments in other namespaces

resource "kubernetes_cluster_role_binding" "runner_inner_cluster_admin" {

metadata {

name = "humanitec-kubernetes-agent-runner-cluster-admin"

}

role_ref {

api_group = "rbac.authorization.k8s.io"

kind = "ClusterRole"

name = "admin"

}

subject {

kind = "ServiceAccount"

name = module.kubernetes_agent_runner.k8s_job_service_account_name

namespace = module.kubernetes_agent_runner.k8s_job_namespace

}

}

Re-initialize your Terraform or OpenTofu environment and run another apply:

Terraform

terraform init

terraform apply -var-file=orchestrator.tfvars

OpenTofu

tofu init

tofu apply -var-file=orchestrator.tfvars

While the apply executes, inspect the resources now being added. Refer to the inline comments for an explanation of each resource.

You now have:

- ✅ Installed a runner component into the demo infrastructure

- ✅ Configured the runner with a key pair

- ✅ Enabled the runner to maintain TF state

- ✅ Registered the runner with the Orchestrator

The runner is now ready to execute deployments, polling the Orchestrator for deployment requests.

Create a project and environment

Prepare the Orchestrator to receive deployments by creating a project with one environment which will be the deployment target.

Projects are the home for a project or application team of your organization. A project is a collection of one or more environments.

Environments are a sub-partitioning of projects. They are usually isolated instantiations of the same project representing a stage in its development lifecycle, e.g. from “development” through to “production”. Environments receive deployments.

Environments are classified by environment types, so you need to create one of these as well.

An environment needs to have a runner assigned to it at all times, so you also create a runner rule. Runner rules are attached to runners and define under which circumstances to use which runner. The runner rule will assign the runner you just created to the new environment.

Open the file orchestrator.tf and append this configuration:

# Create a project

resource "platform-orchestrator_project" "get_started" {

id = "get-started"

}

# Create a runner rule

resource "platform-orchestrator_runner_rule" "get_started" {

runner_id = module.kubernetes_agent_runner.runner_id

project_id = platform-orchestrator_project.get_started.id

}

# Create an environment type

resource "platform-orchestrator_environment_type" "get_started_development" {

id = "get-started-development"

}

# Create an environment "development" in the project "get-started"

resource "platform-orchestrator_environment" "development" {

id = "development"

project_id = platform-orchestrator_project.get_started.id

env_type_id = platform-orchestrator_environment_type.get_started_development.id

# Ensure the runner rule is in place so that the Orchestrator may assign a runner to the environment

depends_on = [platform-orchestrator_runner_rule.get_started]

}

Perform another apply:

Terraform

terraform apply -var-file=orchestrator.tfvars

OpenTofu

tofu apply -var-file=orchestrator.tfvars

The plan will now output the new Orchestrator objects to be added. Confirm the apply to do so.

You now have:

- ✅ Created a logical project and environment to serve as a deployment target

Create a module for a workload

You will now set up the Orchestrator for deploying a simple workload as a Kubernetes Deployment to the demo cluster.

Enabling the Orchestrator to deploy things is done through a module. Modules describe how to provision a real-world resource of a resource type by referencing a Terraform/OpenTofu module.

The Orchestrator is designed around the Terraform/OpenTofu (TF) module architecture. It supports other IaC tools only if they can be wrapped by Terraform/OpenTofu code.

Every module is of a resource type. Resource types define the formal structure for a kind of real-world resource such as an Amazon S3 bucket or PostgreSQL database.

You also need a simple module rule. Module rules are attached to modules and define under which circumstances to use which module.

You will now create one object of each kind. They are all rather simple. The module itself wraps an existing TF module from a public Git source.

Open the file orchestrator.tf and add this configuration to define the Orchestrator objects previously mentioned:

# Create a resource type "k8s-workload-get-started" with an empty output schema

resource "platform-orchestrator_resource_type" "k8s_workload_get_started" {

id = "k8s-workload-get-started"

description = "Kubernetes workload for the Get started tutorial"

output_schema = jsonencode({

type = "object"

properties = {}

})

is_developer_accessible = true

}

# Create a module, setting values for the module variables

resource "platform-orchestrator_module" "k8s_workload_get_started" {

id = "k8s-workload-get-started"

description = "Simple Kubernetes Deployment in default namespace"

resource_type = platform-orchestrator_resource_type.k8s_workload_get_started.id

module_source = "git::https://github.com/humanitec-tutorials/get-started//modules/workload/kubernetes"

module_params = {

image = {

type = "string"

description = "The image to use for the container"

}

variables = {

type = "map"

is_optional = true

description = "Container environment variables"

}

}

module_inputs = jsonencode({

name = "get-started"

namespace = "default"

})

}

# Create a module rule making the module applicable to the demo project

resource "platform-orchestrator_module_rule" "k8s_workload_get_started" {

module_id = platform-orchestrator_module.k8s_workload_get_started.id

project_id = platform-orchestrator_project.get_started.id

}

Perform another apply:

Terraform

terraform apply -var-file=orchestrator.tfvars

OpenTofu

tofu apply -var-file=orchestrator.tfvars

To inspect the TF module code referenced by the module, go to the GitHub source.

The Orchestrator is now configured to deploy resources of the new type k8s-workload-get-started. Verify it by querying the available resource types for the target project environment:

hctl get available-resource-types get-started development

You now have:

- ✅ Instructed the Orchestrator how to deploy a containerized workload via a module

- ✅ Instructed the Orchestrator when to use the module via a module rule

Create a manifest

You can now use the Orchestrator configuration prepared in the previous steps to perform an actual deployment using a manifest file. Manifests allow developers to submit the description of the desired state for an environment. A manifest is the main input for performing a deployment.

Create a new manifests directory and change into it:

cd ..

mkdir manifests

cd manifests

Create a new file manifest-1.yaml in the manifests directory, containing this configuration:

workloads:
  # The name you assign to the workload in the context of the manifest
  demo-app:
    resources:
      # The name you assign to this resource in the context of the manifest
      demo-workload:
        # The resource type of the resource you wish to provision
        type: k8s-workload-get-started
        # The resource parameters. They are mapped to the module_params of the module
        params:
          image: ghcr.io/astromechza/demo-app:latest

The manifest is a high-level abstraction of the demo-app made up of a resource of type k8s-workload-get-started.

Manifests are generally owned and maintained by application developers as a part of their code base, and used to self-serve all kinds of resources. You do not install them into the Orchestrator using hctl or TF like the previous configuration objects.

You now have:

- ✅ Described an application and its resource requirements in an abstracted, environment-agnostic way called a manifest

Perform a deployment

Verify that the demo infrastructure provisioning which may be running in another shell is complete before proceeding.

Perform a deployment of the manifest into the development environment of the get-started project:

hctl deploy get-started development manifest-1.yaml

Confirm the deployment and wait for the execution to finish. It should take less than a minute.

Upon completion, the CLI outputs a hctl logs command to access the deployment logs. Use it to see the execution details.

Run this command to see all prior deployments:

hctl get deployments

Copy the ID from the top of the list and inspect the details by using the ID in this command:

hctl get deployment the-id-you-copied

Inspect the result in the Orchestrator console:

- Open the Orchestrator web console at https://console.humanitec.dev/

- In the Projects view, select the get-started project

- Select the development environment

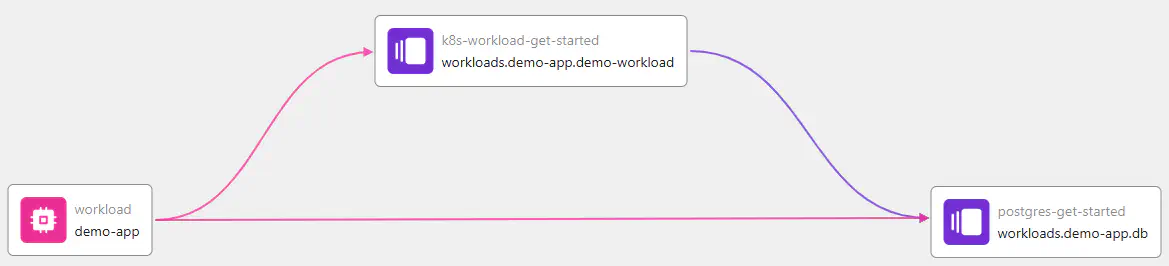

You will see a “Successful” status and a graph containing two nodes. This is the resource graph for the latest deployment. The resource graph shows the dependencies between all active resources in an environment.

Click on the resources to explore their details. In particular, inspect the “Metadata” section of the demo-workload resource. By convention, the Orchestrator will display the content of a regular output named humanitec_metadata of the TF module being used for a resource. This is useful for conveying insights about the real-life object that has been provisioned via the Orchestrator.

Defining metadata output in the module source

outputs.tf (view on GitHub):

output "humanitec_metadata" {

description = "Metadata for the Orchestrator"

value = merge(

{

"Namespace" = var.namespace

},

{

"Image" = var.image

},

{

"Deployment" = kubernetes_deployment.simple.metadata[0].name

}

)

}

You now have:

- ✅ Used the manifest to request a deployment from the Orchestrator into a specific target environment

Inspect the running workload

If you have kubectl available, you can inspect the demo cluster to see the running workload. Otherwise, skip to the next section.

If you have not already set the kubectl context, use this convenience command to display the required command:

Terraform

terraform output -state "../infra/terraform.tfstate" -json \

| jq -r ".k8s_connect_command.value"

OpenTofu

tofu output -state "../infra/terraform.tfstate" -json \

| jq -r ".k8s_connect_command.value"

Copy and execute the command that is displayed. kubectl will now target the demo cluster. Verify the context is set correctly:

kubectl config current-context

The module created a Kubernetes Deployment in the default namespace. Verify it exists:

kubectl get deployments

The demo image being used has a simple web interface. Create a port-forward to the Pod to access it:

kubectl port-forward pod/$(kubectl get pods -l app=get-started -o json \

| jq -r ".items[0].metadata.name") 8080:8080

Open http://localhost:8080 to see the running workload.
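If you prefer to check reachability from a second shell instead of a browser, a small polling loop around curl works while the port-forward is running. This is a minimal sketch; the helper name `wait_for_url` and its default of 10 attempts with a 1 second delay are assumptions chosen for illustration.

```shell
# Hypothetical helper: polls a URL until it responds successfully
# or the attempts run out.
# Arguments: url [attempts] [delay-in-seconds]
wait_for_url() {
  url=$1
  attempts=${2:-10}
  delay=${3:-1}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -s: silent, -f: fail on HTTP errors, -o /dev/null: discard the body
    if curl -sf -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

For example, `wait_for_url http://localhost:8080 && echo "workload is reachable"` returns as soon as the forwarded port answers.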

In the output you see, note the message at the bottom about no postgres being configured. As the final step in this tutorial, you will add a simple PostgreSQL database to the deployment and connect the workload to it.

Quit the port-forward and continue.

You now have:

- ✅ Verified your workload is indeed running on the target environment

Create a module for a database

You will now extend the capabilities of the Orchestrator and enable it to provision a database to be used by the workload. The tutorial uses PostgreSQL as an example.

Just like for the workload previously, that involves creating a resource type, a module encapsulating the TF code, and a module rule.

The PostgreSQL database will use a managed service offering from your cloud provider and the appropriate TF provider:

- AWS: RDS and the hashicorp/aws provider using workload identity (IRSA) for provider authentication

- GCP: Cloud SQL and the hashicorp/google provider using workload identity and an IAM service account for provider authentication

- Azure: Azure Database for PostgreSQL Flexible Server and the hashicorp/azurerm provider using AKS workload identity for provider authentication

Remember that the TF code for provisioning this database will be executed by the runner on your demo infrastructure. The provider configuration must receive appropriate authentication data just like in any other TF execution. To achieve this, you will also define a provider in the Orchestrator. Providers are the reusable direct equivalent of Terraform/OpenTofu providers that may be injected into the TF code referenced in modules.

Return to the orchestrator folder:

cd ../orchestrator

Open the file orchestrator.tf and append this configuration to define the Orchestrator objects previously mentioned.

The first part is cloud provider agnostic:

# Create a resource type "postgres-get-started"

resource "platform-orchestrator_resource_type" "postgres_get_started" {

id = "postgres-get-started"

description = "Postgres instance for the Get started tutorial"

is_developer_accessible = true

output_schema = jsonencode({

type = "object"

properties = {

host = {

type = "string"

}

port = {

type = "integer"

}

database = {

type = "string"

}

username = {

type = "string"

}

password = {

type = "string"

}

}

})

}

The next part is cloud provider specific as it involves defining and using a provider. Append to orchestrator.tf:

AWS

# Create a provider leveraging EKS workload identity

# Because workload identity has already been configured on the cluster,

# the provider configuration can be effectively empty

resource "platform-orchestrator_provider" "get_started" {

id = "get-started"

description = "aws provider for the Get started tutorial"

provider_type = "aws"

source = "hashicorp/aws"

version_constraint = "~> 6"

}

# Create a module "postgres-get-started"

resource "platform-orchestrator_module" "postgres_get_started" {

id = "postgres-get-started"

description = "Simple cloud-based Postgres instance"

resource_type = platform-orchestrator_resource_type.postgres_get_started.id

module_source = "git::https://github.com/humanitec-tutorials/get-started//modules/postgres/${var.enabled_cloud_provider}"

provider_mapping = {

aws = "${platform-orchestrator_provider.get_started.provider_type}.${platform-orchestrator_provider.get_started.id}"

}

}

GCP

# Create a provider leveraging GKE workload identity

# Because workload identity has already been configured on the cluster,

# the provider configuration does not require any further authentication data

resource "platform-orchestrator_provider" "get_started" {

id = "get-started"

description = "google provider for the Get started tutorial"

provider_type = "google"

source = "hashicorp/google"

version_constraint = "~> 7"

configuration = jsonencode({

project = var.gcp_project_id

region = var.gcp_region

})

}

# Create a module "postgres-get-started"

resource "platform-orchestrator_module" "postgres_get_started" {

id = "postgres-get-started"

description = "Simple cloud-based Postgres instance"

resource_type = platform-orchestrator_resource_type.postgres_get_started.id

module_source = "git::https://github.com/humanitec-tutorials/get-started//modules/postgres/${var.enabled_cloud_provider}"

provider_mapping = {

google = "${platform-orchestrator_provider.get_started.provider_type}.${platform-orchestrator_provider.get_started.id}"

}

}

Azure

# Create a provider leveraging AKS workload identity

# Because workload identity has already been configured on the cluster,

# the provider configuration does not require any further authentication data

resource "platform-orchestrator_provider" "get_started" {

id = "get-started"

description = "azurerm provider for the Get started tutorial"

provider_type = "azurerm"

source = "hashicorp/azurerm"

version_constraint = "~> 4"

configuration = jsonencode({

use_aks_workload_identity = true

use_cli = false

subscription_id = var.azure_subscription_id

tenant_id = var.azure_tenant_id

"features[0]" = {}

})

}

# Create a module "postgres-get-started"

resource "platform-orchestrator_module" "postgres_get_started" {

id = "postgres-get-started"

description = "Simple cloud-based Postgres instance"

resource_type = platform-orchestrator_resource_type.postgres_get_started.id

module_source = "git::https://github.com/humanitec-tutorials/get-started//modules/postgres/${var.enabled_cloud_provider}"

provider_mapping = {

azurerm = "${platform-orchestrator_provider.get_started.provider_type}.${platform-orchestrator_provider.get_started.id}"

}

}

The last part is creating a module rule and is provider agnostic once more. Append to orchestrator.tf:

# Create a module rule making the module applicable to the demo project

resource "platform-orchestrator_module_rule" "postgres_get_started" {

module_id = platform-orchestrator_module.postgres_get_started.id

project_id = platform-orchestrator_project.get_started.id

}

Perform another apply:

Terraform

terraform apply -var-file=orchestrator.tfvars

OpenTofu

tofu apply -var-file=orchestrator.tfvars

Verify that the new resource type postgres-get-started is available:

hctl get available-resource-types get-started development

You now have:

- ✅ Instructed the Orchestrator how to provision a PostgreSQL database by providing another module and module rule

- ✅ Configured a TF provider which can be injected into upcoming executions to access the target infrastructure

Add the database to the manifest

Create another manifest that in addition to the workload requests a PostgreSQL database and connects the workload to it.

Return to the manifests directory:

cd ../manifests

Create a new file manifest-2.yaml containing this configuration. It expands the previous manifest to request a database resource and set a container environment variable, using outputs from the database resource to construct a connection string. Note the elements marked NEW:

workloads:
  # The name you assign to the workload in the context of the manifest
  demo-app:
    resources:
      # The name of the workload resource in the context of the manifest
      demo-workload:
        # The resource type of the workload resource you wish to provision
        type: k8s-workload-get-started
        # The resource parameters. They are mapped to the module_params of the module
        params:
          image: ghcr.io/astromechza/demo-app:latest
          variables:
            # NEW: This environment variable is used by the demo image to create a connection to a postgres database
            OVERRIDE_POSTGRES: postgres://${resources.db.outputs.username}:${resources.db.outputs.password}@${resources.db.outputs.host}:${resources.db.outputs.port}/${resources.db.outputs.database}
      # NEW: The name of the database resource in the context of the manifest
      db:
        # NEW: The resource type of the database resource you wish to provision
        type: postgres-get-started

Requesting and connecting the database resource is literally a matter of a few lines added to the manifest file. The Orchestrator configuration you created previously (resource type, module, module rule, provider) will execute this request.
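To make the placeholder substitution concrete, the following shell sketch assembles a connection string in the same shape as the OVERRIDE_POSTGRES value. The function name build_postgres_url and all sample values are hypothetical and exist only for illustration. The real substitution is performed by the Orchestrator at deploy time, not by a script.

```shell
#!/bin/sh
# Illustrative only: mimics how the five database outputs are combined
# into a single connection string. All values below are made up.
build_postgres_url() {
  # Arguments: username password host port database
  printf 'postgres://%s:%s@%s:%s/%s\n' "$1" "$2" "$3" "$4" "$5"
}

# Hypothetical values standing in for resources.db.outputs.*
build_postgres_url get_started s3cretPass db.example.internal 5432 get_started
```

Note that the database modules shown later in this tutorial generate the password with special = false, so the value contains only letters and digits and can be placed into the URL without any escaping.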

You now have:

- ✅ Extended the manifest to request an additional database resource

- ✅ Extended the manifest to inject outputs from the new database resource into the workload

Re-deploy

Perform another deployment into the same development environment of the get-started project using the new manifest file:

hctl deploy get-started development manifest-2.yaml

This new deployment will take a few minutes as it now creates a managed PostgreSQL instance. Use that time to inspect the TF code behind the database module, shown in the sections below for each cloud provider.

Note that the module has a required_providers block but it does not include a provider block for configuring providers. Instead, the Orchestrator will inject the provider configuration at deploy time based on the provider object you created earlier and mapped into the module via the provider_mapping.

Database module main code (AWS)

main.tf (view on GitHub):

# Module for creating a simple default RDS PostgreSQL database

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 6"

}

random = {

source = "hashicorp/random"

version = "~> 3.0"

}

}

}

locals {

db_name = "get_started"

db_username = "get_started"

}

data "aws_region" "current" {}

resource "random_string" "db_password" {

length = 12

lower = true

upper = true

numeric = true

special = false

}

resource "aws_db_instance" "get_started_rds_postgres_instance" {

allocated_storage = 5

engine = "postgres"

identifier = "get-started-rds-db-instance"

instance_class = "db.t4g.micro"

storage_encrypted = true

publicly_accessible = true

delete_automated_backups = true

skip_final_snapshot = true

db_name = local.db_name

username = local.db_username

password = random_string.db_password.result

apply_immediately = true

multi_az = false

dedicated_log_volume = false

}

# Need to open access to the database port via a security rule despite "publicly_accessible"

resource "aws_security_group_rule" "get_started_postgres_access" {

type = "ingress"

from_port = aws_db_instance.get_started_rds_postgres_instance.port

to_port = aws_db_instance.get_started_rds_postgres_instance.port

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

security_group_id = tolist(aws_db_instance.get_started_rds_postgres_instance.vpc_security_group_ids)[0]

}

Database module main code (GCP)

main.tf (view on GitHub):

# Module for creating a simple default CloudSQL PostgreSQL database

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "~> 7"

}

random = {

source = "hashicorp/random"

version = "~> 3.0"

}

}

}

locals {

db_name = "get_started"

db_username = "get_started"

}

data "google_client_config" "this" {}

resource "random_string" "root_password" {

length = 12

lower = true

upper = true

numeric = true

special = false

}

resource "random_string" "db_password" {

length = 12

lower = true

upper = true

numeric = true

special = false

}

# Minimalistic Postgres CloudSQL instance accessible from anywhere

resource "google_sql_database_instance" "instance" {

name = "get-started-cloudsql-db-instance"

project = data.google_client_config.this.project

region = data.google_client_config.this.region

database_version = "POSTGRES_18"

root_password = random_string.root_password.result

settings {

tier = "db-custom-2-7680"

edition = "ENTERPRISE"

ip_configuration {

authorized_networks {

name = "anywhere"

value = "0.0.0.0/0"

}

}

data_cache_config {

data_cache_enabled = false

}

backup_configuration {

enabled = false

}

}

deletion_protection = false

}

# User for the instance

resource "google_sql_user" "builtin_user" {

name = local.db_username

password = random_string.db_password.result

instance = google_sql_database_instance.instance.name

type = "BUILT_IN"

}

# Database in the CloudSQL instance

resource "google_sql_database" "database" {

name = local.db_name

instance = google_sql_database_instance.instance.name

}

Database module main code (Azure)

main.tf (view on GitHub):

# Module for creating a simple default Azure Database for PostgreSQL Flexible Server

terraform {

required_providers {

azurerm = {

source = "hashicorp/azurerm"

version = "~> 4"

}

random = {

source = "hashicorp/random"

version = "~> 3.0"

}

}

}

locals {

db_name = "get_started"

db_username = "get_started"

}

data "azurerm_resource_group" "get_started" {

name = "get-started-rg"

}

resource "random_string" "db_password" {

length = 12

lower = true

upper = true

numeric = true

special = false

}

# Minimalistic Postgres Flexible Server instance accessible from anywhere

resource "azurerm_postgresql_flexible_server" "get_started" {

name = "get-started-postgres"

resource_group_name = data.azurerm_resource_group.get_started.name

location = data.azurerm_resource_group.get_started.location

version = "16"

administrator_login = local.db_username

administrator_password = random_string.db_password.result

storage_mb = 32768

sku_name = "B_Standard_B1ms"

backup_retention_days = 7

}

# Firewall rule to allow access from anywhere

resource "azurerm_postgresql_flexible_server_firewall_rule" "allow_all" {

name = "allow-all"

server_id = azurerm_postgresql_flexible_server.get_started.id

start_ip_address = "0.0.0.0"

end_ip_address = "255.255.255.255"

}

# Database in the Flexible Server instance

resource "azurerm_postgresql_flexible_server_database" "get_started" {

name = local.db_name

server_id = azurerm_postgresql_flexible_server.get_started.id

collation = "en_US.utf8"

charset = "UTF8"

}

Because the provider configuration is injected by the Orchestrator rather than defined in the module, control over the providers and the authentication mechanisms they use lies with the Orchestrator and is governed by the platform engineers.

Once the deployment has finished, return to the Orchestrator console at https://console.humanitec.dev and open the development environment of the get-started project.

The resource graph now contains an additional active resource node for the PostgreSQL database. Click on the resource to explore its details. It exposes a set of metadata on the real-world database resource, including a link to the cloud console or portal. Click the link to view it.

If you have kubectl available and your context set, you may again connect to the running pod:

kubectl port-forward pod/$(kubectl get pods -l app=get-started -o json \

| jq -r ".items[0].metadata.name") 8080:8080

Open http://localhost:8080 to see the status output. The demo workload now displays the message Database table count result: 0, which means that it could connect to the PostgreSQL database and found it to be empty, which is expected.
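If you prefer checking from the terminal, a small helper along these lines could look for the status message in the page output. This is a sketch under assumptions: the function name has_db_result is hypothetical, curl must be installed, and the message is assumed to appear verbatim in the raw response body.

```shell
#!/bin/sh
# Illustrative helper: reads page text on stdin and succeeds only if the
# database status message mentioned in the tutorial is present.
has_db_result() {
  grep -q 'Database table count result:'
}

# Usage while the port-forward is still active (assumes curl is installed):
#   curl -s http://localhost:8080 | has_db_result && echo "database reachable"
```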

Quit the port-forward and continue.

You now have:

- ✅ Extended the running deployment with a PostgreSQL database instance

Clean up

Once you are done exploring, clean up the objects you created in the process.

- Delete the Orchestrator environment first. This will make the Orchestrator execute a destroy on all the resources in the environment, and may therefore take several minutes.

Navigate to the orchestrator directory:

cd ../orchestrator

Terraform

terraform destroy -target="platform-orchestrator_environment.development" \

-var-file=orchestrator.tfvars

OpenTofu

tofu destroy -target="platform-orchestrator_environment.development" \

-var-file=orchestrator.tfvars

Once complete, if you now inspect the cluster and your cloud console again, you will find both the workload and the database resource gone.

- Destroy the remaining Orchestrator configuration objects.

Terraform

terraform destroy -var-file=orchestrator.tfvars

OpenTofu

tofu destroy -var-file=orchestrator.tfvars

- Destroy all demo infrastructure created via TF. This will take several minutes.

Navigate to the infra directory:

cd ../infra

Then execute the destroy:

Terraform

terraform destroy

OpenTofu

tofu destroy

- Finally, remove the local directory containing the cloned repository and your additions. If you want to keep them for later review, skip this step.

cd ../../

rm -rf get-started
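The order of the destroy steps above matters: the environment goes first, then the remaining Orchestrator configuration, then the demo infrastructure. The following dry-run sketch only prints the commands in that order so you can review them before running anything. The TF_BIN variable and the cleanup_plan function are hypothetical names introduced for this illustration. The sketch assumes you start in the manifests directory, as at the end of the previous section.

```shell
#!/bin/sh
# Dry-run sketch: prints the cleanup commands from this section in order.
# TF_BIN is a hypothetical variable; set it to "tofu" when using OpenTofu.
TF_BIN="${TF_BIN:-terraform}"

cleanup_plan() {
  # 1. Destroy the environment first so the Orchestrator removes its resources
  echo "cd ../orchestrator"
  echo "$TF_BIN destroy -target=\"platform-orchestrator_environment.development\" -var-file=orchestrator.tfvars"
  # 2. Destroy the remaining Orchestrator configuration objects
  echo "$TF_BIN destroy -var-file=orchestrator.tfvars"
  # 3. Destroy the demo infrastructure
  echo "cd ../infra"
  echo "$TF_BIN destroy"
}

cleanup_plan
```

Printing rather than executing keeps the sketch safe to run at any point; each destroy command still asks for confirmation when you run it yourself.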

Recap

This concludes the tutorial. You have learned how to:

- ✅ Set up your infrastructure to run Orchestrator deployments

- ✅ Describe an application and its resource requirements

- ✅ Use the Orchestrator to deploy a workload

- ✅ Use the Orchestrator to deploy dependent infrastructure resources like a database

- ✅ Observe the results and status of deployments

- ✅ Create a link from the Orchestrator to real-world resources

The tutorial made some choices that we would like to highlight, along with the alternatives available to you.

Runner compute vs. target compute: the tutorial uses the same compute (here: a Kubernetes cluster) to run both your runners and an actual application workload. In a real-life setting, you may want to use a dedicated runner compute instance to reduce the risk of interference with applications.

Containerized and non-containerized workloads: the tutorial uses a containerized workload on Kubernetes as an example, but a “workload” can really have any shape, fitting its execution environment. You may deploy any flavor of workload artefacts by providing the proper module and TF code.

Workload and/or infrastructure: the tutorial deploys both a workload and a piece of infrastructure, but it can be just one of the two as well. You describe what is to be deployed via the manifest.

Next steps

- Follow an advanced showcase deploying workloads to VMs